This text is part of the Typography course at Eötvös Loránd University. You are free to reuse it, but please refer to this page as its source. Péter Szigetvári

An ever-increasing portion of texts we produce is not printed any more, but produced, stored, and used electronically. Many texts printed in past centuries are also gradually converted to an electronic format. This transition has many interesting cultural repercussions, but here we only discuss some technical issues.

As opposed to traditional texts (written or printed on paper or some other material), electronic texts are rather easy to modify, store, duplicate, and distribute. However, they may be more difficult to produce and are more difficult to access. To access texts on paper, all one needs is proper lighting. To produce and access electronic texts, one needs an electronic device, power, software, and an agreed-upon encoding. Here we will discuss the last of these prerequisites, encoding.

A character, say the letter “a”, may be stored on the disk of a computer in two ways. You could specify the area that this letter occupies and then specify which parts of that area are the foreground (ink) and which parts are the background (paper). This is a foolproof way of giving the exact shape of the character you would like to see. But this method has serious drawbacks. Take, for example, the following five characters:

a a a a a

Not only is the area covered by these five letters different, the arrangement of ink- and paper-coloured dots also differs rather significantly. The letter in the middle has a shape that could even belong to a letter unrelated to the other four.

Of course, we know that this is not the case: these five characters are different manifestations of the same letter. If you wanted to find all occurrences of the word ant in a text, in the overwhelming majority of cases it would be irrelevant whether it occurred as “ant”, or as “ant”, or as “ant”. Furthermore, specifying the ink-coloured dots that you were searching for in the word “ant” would require a rather complex expression.

So a more obvious method is to assign a label to the concept of a-ness and refer to all a’s by that label. Computers are only capable of storing numbers, and only in binary format (also check out this practical introduction). Thus an obvious way of representing a character is a number. For our purposes, it is enough to know that a computer works with numbers between 0 and 255; the unit that stores one such number is referred to as a byte. Each byte contains eight bits (binary digits, each a 0 or a 1), and 2⁸ = 256, which is how many different values a byte may have.
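
The arithmetic of bits and bytes can be checked with a few lines of Python. This is only a small sketch; the helper name `to_bits` is made up for this illustration.

```python
# A byte is eight binary digits, so it can hold 2**8 different values.
BITS_PER_BYTE = 8
values_per_byte = 2 ** BITS_PER_BYTE   # the values 0 through 255


def to_bits(number: int) -> str:
    """Return the eight-digit binary form of a number between 0 and 255."""
    if not 0 <= number <= 255:
        raise ValueError("a single byte only holds 0..255")
    return format(number, "08b")


print(values_per_byte)      # 256
print(to_bits(0))           # 00000000
print(to_bits(200))         # 11001000
print(to_bits(255))         # 11111111
print(int("11001000", 2))   # 200
```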

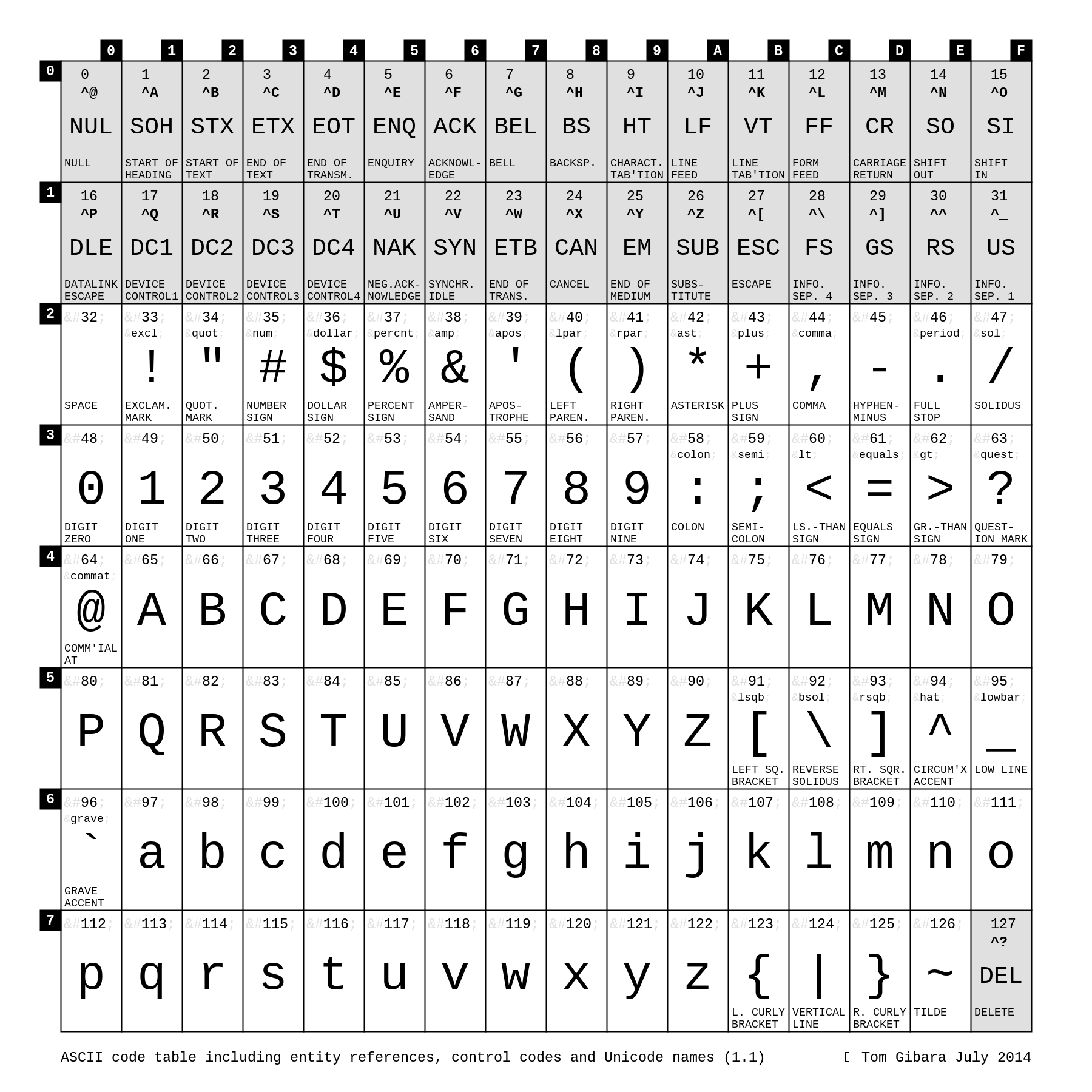

The character “a” happens to be assigned the number 97. This number is not a very obvious choice if you look at its decimal format, but it makes more sense in hexadecimal format, 0x61 (by convention, hexadecimal numbers are prefixed by “0x”). In the following table, you can see “a” in row 6, column 1 (that is in position 0x61; the decimal format of each number is also given at the top of each box; hexadecimal numbers are more readily comprehensible for humans than binary, and at the same time they are easily converted to binary).

You can see that the remaining lower case letters after “a” occupy the following positions, up to 0x7A. The upper case version of each letter is assigned a different number; these are two rows above each lower case letter, thus “A” is 0x41 and “Z” is 0x5A. The chart also contains the ten digits, punctuation characters and some symbols. Only 95 of the 128 numbers are assigned to printable characters (these cells have a white background); the others are control characters, like TAB or NEWLINE (the details are more complicated, but that is not our present concern).
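
The positions mentioned above can be looked up with Python's built-in `ord` and `hex` functions. The sketch below assumes a chart with 16 columns, so that the row and the column are simply the two hexadecimal digits of the number.

```python
code = ord("a")                  # the number assigned to "a"
print(code, hex(code))           # 97 0x61

# In a 16-column chart, row and column are the two hexadecimal digits.
row, column = divmod(code, 16)
print(row, column)               # 6 1

# Upper case letters sit two rows (2 * 16 = 32 positions) above lower case.
case_offset = ord("a") - ord("A")
print(hex(ord("A")), hex(ord("Z")), hex(ord("z")))   # 0x41 0x5a 0x7a
print(case_offset)               # 32

# Count the printable characters among the 128 numbers.
printable = [chr(n) for n in range(128) if chr(n).isprintable()]
print(len(printable))            # 95
```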

This encoding, using numbers 0–127 (0x00–0x7F), was agreed on in the 1960s, and is referred to as ASCII (pronounced [áskij]), more specifically 7-bit ASCII, since it uses only 7 of the 8 bits in a byte (2⁷ = 128). It is almost universal in computing today, so these are the characters that will never get messed up in the rendering of electronic text.
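
What “7-bit” means in practice is that the highest bit of every byte stays 0. The following sketch tests this for a string; the function name `is_seven_bit` is only an illustrative choice.

```python
def is_seven_bit(data: bytes) -> bool:
    """True if every byte leaves its highest bit (worth 128) unset."""
    return all((byte & 0b10000000) == 0 for byte in data)


plain = "ant".encode("ascii")
print(list(plain))             # [97, 110, 116]
print(is_seven_bit(plain))     # True

# A letter with a diacritic has no slot among the 128 ASCII numbers.
try:
    "ánt".encode("ascii")
except UnicodeEncodeError as error:
    print("not ASCII:", error.reason)
```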

The letters included in the 7-bit ASCII set are only those that occur in English texts, hence it is also called US-ASCII. Texts of most other languages that employ Roman characters, like French, Spanish, Danish, Slovak, or Hungarian, use further letters with diacritics, like, for example, “á”, “ç”, “ñ”, “ø”, or “š” (for a detailed discussion, see topic 8). Also, 7-bit ASCII only includes an undirected single (', character 0x27) and an undirected double quote mark (", character 0x22), while in a properly typeset text these come in opening and closing pairs (‘…’, “…”). And there are plenty of other symbols that a typographer needs: not just a single hyphen (character 0x2D), but also an en-dash (“–”), an em-dash (“—”), a minus sign (“−”), etc. Also missing are the Spanish-style inverted question mark (“¿”), the per-mille symbol (“‰”), the pilcrow (“¶”) and the section sign (“§”), the less-than-or-equal-to sign (“≤”), etc, etc. (See topic 7 for more details.)

The first idea was to assign the remaining numbers, 128–255, to these symbols. The problem is that there are way more characters to accommodate than the available 128 slots. Therefore different sets were assigned to the same slots. These different sets are referred to as code pages. So whereas on one code page (namely, CWI-2) the slot 0x93 (that is, decimal 147) is assigned to “ő”, on another one (namely, 860) the same slot is assigned to “ô”. While this difference may be acceptable, the first example code page has “Ű” in slot 0x98, while the second one has “Ì” in this position. Both these pages have “β” in position 0xE1, while a third one (namely, Windows-1250) has “á” in this position. You probably see the problem: if a byte stores the number 225 (0xE1), the computer has to decide whether to put “á” or “β” on your screen. For this decision the code page has to be given. It’s like encrypted text which must be accompanied by a key (the code page used for the encryption). If you use the wrong key, you will get garbage.
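
The “key” idea can be tried out in Python, which ships conversion tables for code page 860 and Windows-1250 under the codec names `cp860` and `cp1250`. CWI-2 is left out of this sketch because, as far as I know, the standard library has no table for it. Note also that Python's table for code page 860 treats the glyph in slot 0xE1 as the German sharp s “ß”, which in that code page family shares its shape with the Greek beta.

```python
byte = bytes([0xE1])     # one byte holding the number 225

# The same byte read with two different "keys".
as_cp1250 = byte.decode("cp1250")
as_cp860 = byte.decode("cp860")
print(as_cp1250, as_cp860)

# The two other slots of code page 860 mentioned above.
slot_93 = bytes([0x93]).decode("cp860")
slot_98 = bytes([0x98]).decode("cp860")
print(slot_93, slot_98)
```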

The present webpage is encoded in UTF-8 (more on which below). For the sake of illustration, I have lied to my browser that its encoding was ISO 8859‑1 and taken a screenshot of how it rendered the previous paragraph based on this false information, which I have inserted above. You can see that any character outside US-ASCII (the en-dash in the first line, the directed quotes, the letters with diacritics, the Greek letter “β”) fails to be rendered as intended. Letters without diacritics, digits, and basic punctuation marks (the period, the comma, the parentheses) are fine, since the US-ASCII convention is universally assumed by computers.
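
The same experiment can be repeated without a browser: encode some text as UTF-8 and then decode the bytes as if they were ISO 8859‑1 (which Python calls `latin-1`). This is a minimal sketch with a made-up function name, `misread`; browsers tend to substitute Windows-1252 for this label, so a real screenshot may differ in a few characters.

```python
def misread(text: str, wrong_encoding: str = "latin-1") -> str:
    """Encode text as UTF-8, then decode the bytes with the wrong key."""
    return text.encode("utf-8").decode(wrong_encoding)


print(misread("ant"))    # ant
print(misread("á"))      # Ã¡

# Applying the steps in reverse recovers the intended character.
repaired = misread("á").encode("latin-1").decode("utf-8")
print(repaired)          # á
```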

It is impossible to squeeze all the relevant characters into the 256 slots available in a byte, where 128 slots are already taken by the standard US-ASCII symbol set, as we have seen. The solution turned out to be what was also applied in many European writing systems: digraphs. English has [s] and [ʃ], but since Latin did not have this contrast, there is a single letter, S, in the alphabet. So for the other sound English typically uses a letter combination, SH. (The same problem arises with digraphs in spelling systems as with code pages above: other languages use other digraphs, CH, SZ, SJ, or various other encodings.)

Using the idea of digraphs, Unicode uses one, two, three, or even four bytes to encode characters. We have seen that a single byte may take 2⁸ = 256 different values. Two bytes can accommodate 2¹⁶ = 65,536 (= 256 × 256) different values, while the number of possibilities with four bytes is 256⁴ = 2³² (which is a figure over 4 billion). This allows a universal standard that assigns a unique slot to just about anything: IPA, Greek, Cyrillic, Arabic, Hebrew, Devanagari, Japanese Katakana and Hiragana, all Chinese ideograms, ancient scripts, with all imaginable diacritics, ligatures (more on which in topic 8), and an increasing number of emojis. This system is referred to as Unicode, and it seems to be becoming the universal standard for text encoding (ISO 10646). Here are some examples:

| character | hexadecimal | decimal | bytes needed for the number |
|---|---|---|---|
| á | 0xE1 | 225 | 1 byte |
| Ł | 0x141 | 321 | 2 bytes |
| ə | 0x259 | 601 | 2 bytes |
| я | 0x44F | 1103 | 2 bytes |
| ボ | 0x30DC | 12508 | 2 bytes |
| 😍 | 0x1F60D | 128525 | 3 bytes |
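
The figures in the table can be recomputed as follows. The byte counts in its last column appear to be the number of bytes needed to hold the bare number; the sketch also prints how many bytes the UTF-8 encoding of each character takes, which is somewhat more, since UTF-8 spends some bits of every byte on bookkeeping. The helper name `bytes_for_number` is hypothetical.

```python
def bytes_for_number(number: int) -> int:
    """Smallest number of whole bytes that can hold the number."""
    return max(1, (number.bit_length() + 7) // 8)


# Columns: character, hexadecimal, decimal, bytes for the number, UTF-8 bytes.
for char in "áŁəяボ😍":
    number = ord(char)
    utf8 = char.encode("utf-8")
    print(char, hex(number), number, bytes_for_number(number), len(utf8))

# The powers quoted in the text.
two_bytes = 256 ** 2      # 65,536 = 2**16
four_bytes = 256 ** 4     # 4,294,967,296 = 2**32
print(two_bytes, four_bytes)
```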

One problem with digraphs is that sometimes a combination of symbols that is used as a digraph is not in fact a digraph, for example, while in the word bishop the SH represents [ʃ], in another word, mishap it represents the combination of [s] and [h], not [ʃ]. Unicode has various ways of taking care of such ambiguities, accordingly there are several versions, of which UTF‑8 has become the most widespread on the web (its current share in web documents is around 98%), as the following chart shows (“W Europe” is ISO 8859‑1 mentioned above).
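
How UTF-8 avoids the bishop/mishap kind of ambiguity is not spelled out above, so take the following as a sketch of one known property of UTF-8 rather than a full account: every byte announces its own role in its leading bits. A byte beginning with 0 is a plain ASCII character, a byte beginning with 10 can only be the continuation of a longer sequence, and a byte beginning with 11 opens such a sequence. The function names are made up for this illustration.

```python
def show_bits(text: str, encoding: str = "utf-8") -> list[str]:
    """Return the bytes of the encoded text as strings of eight bits."""
    return [format(byte, "08b") for byte in text.encode(encoding)]


def is_continuation(byte: int) -> bool:
    """UTF-8 continuation bytes always begin with the bits 10."""
    return byte >> 6 == 0b10


print(show_bits("a"))    # ['01100001']
print(show_bits("á"))    # ['11000011', '10100001']

# Other Unicode encodings ("versions") use fixed or differently sized units.
for encoding in ("utf-8", "utf-16-be", "utf-32-be"):
    print(encoding, len("á".encode(encoding)), len("😍".encode(encoding)))
```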

There exist other ways of encoding characters outside the ASCII range, but they are gradually losing their relevance. We just mention two here. Both apply the “digraph” idea and use exclusively ASCII bytes.

Prior to the rise of Unicode, web documents used HTML entities (this is the “ASCII only” red line in the chart above). These begin with the symbol “&” and end in “;”, with some brief mnemonic description of the character in between. Here are some examples (and here’s a full list):

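| character | entity name | entity number |
|---|---|---|
| ¶ | `&para;` | `&#182;` or `&#xB6;` |
| á | `&aacute;` | `&#225;` or `&#xE1;` |
| ñ | `&ntilde;` | `&#241;` or `&#xF1;` |
| ə | none | `&#601;` or `&#x259;` |
| — | `&mdash;` | `&#8212;` or `&#x2014;` |
| “ | `&ldquo;` | `&#8220;` or `&#x201C;` |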

“Para” and “mdash” should be obvious, “aacute” means “an a with an acute accent”, while “ntilde” is “an n with a tilde”. “Ldquo” stands for “left double quote mark”. The same characters can also be encoded by their Unicode numbers in either decimal (first) or hexadecimal (second) form. This encoding may come in handy in case you are unable to type the relevant Unicode symbol on some keyboard.
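
Python's standard `html` module knows these entities, so the conversion can be tried in both directions. In this sketch the function `to_entity` is a hypothetical helper that prefers the mnemonic name and falls back to the decimal number when the module's `codepoint2name` table has no name for the character.

```python
import html
from html.entities import codepoint2name


def to_entity(char: str) -> str:
    """Return an ASCII-only HTML entity for a single character."""
    number = ord(char)
    if number in codepoint2name:
        return f"&{codepoint2name[number]};"
    return f"&#{number};"


print(to_entity("á"))     # &aacute;
print(to_entity("ə"))     # &#601;

# The name, the decimal and the hexadecimal form decode to the same letter.
decoded = html.unescape("&aacute; &#225; &#xE1;")
print(decoded)            # á á á
```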

Another ASCII-only character encoding system you may come across is that introduced by a professional typesetting system called TeX. The main character here is the backslash, “\”, but there are many “digraphs” used as well. We exemplify some of the characters above in TeX:

| character | TeX’s encoding |
|---|---|
| ¶ | \P |
| á | \'a |
| ñ | \~n |
| — | --- |
| “ | `` |

The main advantage of TeX (and its derivatives, like LaTeX) is that anything can be customized, so ultimately the user can use any character or string of characters to mean whatever they prefer.

So we now have an idea of how characters can be encoded electronically. However, there are further properties of texts that go beyond strings of characters. The following are four instances of the same character string (the same word), but they may serve different purposes in a text:

1 typography 2 typography 3 typography 4 typography

String 1 is “normal” text called roman type. String 2 is italic type, imitating handwriting. String 3 is boldface, more visible symbols containing more ink. String 4 is monospace, imitating a typewriter, where each character is of the same width. In addition, there are further types, but these four are enough for illustration.

Clearly, it would not do to assign a different number to “t”, “t”, “t”, and “t”, and to each of the other characters: being bold or italic is not so much a property of a character as of longer stretches of the text. So instead of marking this property character by character, we can insert marks in the text indicating things like “italics begin here” and “italics end here”. This is called markup. As you might guess, there are dozens of different markup conventions; we will only discuss a few widespread ones here, to give you an idea.

Consider the following sentence and its marked up versions:

Typography tells you how to typeset “2³ < 3²”, but not if it is true.

HTML

<b>Typography</b> tells you <i>how</i> to typeset “2<sup>3</sup> &lt; 3<sup>2</sup>”, but not if it is <kbd>true</kbd>.

TeX

{\bf Typography} tells you {\it how} to typeset ``$2^3 < 3^2$'', but not if it is {\tt true}.

Wikipedia

'''Typography''' tells you ''how'' to typeset “<math>2^3 < 3^2</math>”, but not if it is <code>true</code>.

Vanilla Markdown

**Typography** tells you _how_ to typeset "2<sup>3</sup> < 3<sup>2</sup>", but not if it is `true`.

You can see that HTML (webpages) primarily uses tags like <b> for ‘boldface begins here’ and </b> for ‘boldface ends here’. Since HTML uses the characters < and > for its tags, when you want to specify a real ‘less than’ sign, you have to use the entity `&lt;`. TeX uses braces, { and }, for enclosing domains and again the backslash, \, for specifying that the string in the domain should be boldface, italics, or typewriter type.
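
To see that the tags and the text are really two separate layers, one can let a program pull them apart. The sketch below uses Python's standard `html.parser` module; the class and function names are invented for this illustration. Notice that once the tags are gone, nothing tells the exponents apart from ordinary digits any more.

```python
from html.parser import HTMLParser


class MarkupSplitter(HTMLParser):
    """Collect the opening tags and the plain text of an HTML fragment."""

    def __init__(self) -> None:
        super().__init__()      # by default, entities like &lt; are decoded
        self.tags: list[str] = []
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)

    def handle_data(self, data):
        self.chunks.append(data)


def split_markup(source: str) -> tuple[list[str], str]:
    parser = MarkupSplitter()
    parser.feed(source)
    parser.close()              # flush any text that is still buffered
    return parser.tags, "".join(parser.chunks)


tags, text = split_markup(
    "<b>Typography</b> tells you <i>how</i> to typeset "
    "“2<sup>3</sup> &lt; 3<sup>2</sup>”, but not if it is <kbd>true</kbd>."
)
print(tags)    # ['b', 'i', 'sup', 'sup', 'kbd']
print(text)    # Typography tells you how to typeset “23 < 32”, but not if it is true.
```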

Wikipedia’s markup and a recent, but increasingly popular markup language called Markdown aim at being simpler and more readable. People often use stars (asterisks) for emphasizing parts of their text, and that is exactly what Markdown does as well. (Here is a recent intro.)
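
Because Markdown's marks are so simple, a few of them can be turned into HTML tags with plain search-and-replace. The following is a deliberately naive sketch, not a real Markdown converter: it handles only three inline conventions and ignores nesting, escaping, and underscores inside words. The names `RULES` and `inline_markdown_to_html` are made up.

```python
import re

# (pattern, replacement) pairs for three inline Markdown conventions.
RULES = [
    (re.compile(r"\*\*(.+?)\*\*"), r"<b>\1</b>"),     # **bold**
    (re.compile(r"_(.+?)_"), r"<i>\1</i>"),           # _italic_
    (re.compile(r"`(.+?)`"), r"<code>\1</code>"),     # `code`
]


def inline_markdown_to_html(source: str) -> str:
    """Apply each rule in turn to the whole string."""
    for pattern, replacement in RULES:
        source = pattern.sub(replacement, source)
    return source


converted = inline_markdown_to_html(
    "**Typography** tells you _how_, but not if it is `true`."
)
print(converted)
# <b>Typography</b> tells you <i>how</i>, but not if it is <code>true</code>.
```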

We obviously cannot get into the details of any of these markup languages in the frame of this course, but it is important that you know about the existence of such systems. We will come back to the importance of markup languages below.

It is important to note that we mark certain portions of our text as boldface or italics for a reason. Both of these font styles (actually boldface is a font weight) are used for emphasis. In fact, HTML has two ways of marking something as “boldface”, <b>, which we have already seen, and also <strong>. Similarly, beside <i> we also have <em> for emphasis.

More importantly, there are several reasons to emphasize some bit of text. One such reason may be that it is the title of some part of the text, say a section title. In this case, it is not enough to simply enclose that line between a pair of tags like <strong>…</strong>. A line that is set in boldface may tell a human that this is a section title, but it is not necessarily obvious to a machine. However, electronic texts are not only read by humans, but also by machines. Imagine that you want to generate a table of contents based on your text. Your machine will know which bits of your text are section titles only if you mark them as such. The line above, 3. Encoding structure, is a section title, which is enclosed in a pair of tags <h2>…</h2> (if you do not believe me, just click on “View Page Source”, sometimes bound to Control-U, which will show you all the tags I used in this text). The h here means heading, while 2 means it is a second level heading. The title at the top of the page (Electronic texts) is enclosed in <h1> and the titles of the subsections in §1 (Encoding characters) are enclosed in <h3>.
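
Generating a table of contents from such tags takes only a few lines. The sketch below again relies on Python's standard `html.parser`; the class name, the function name, and the sample page are hypothetical (the sample borrows titles mentioned above, but it is not the actual source of this page). The bold line in the sample is ignored, precisely because it is not marked as a heading.

```python
from html.parser import HTMLParser

HEADING_TAGS = ("h1", "h2", "h3")


class HeadingCollector(HTMLParser):
    """Remember the level and the text of every h1, h2 and h3 element."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: list[tuple[int, str]] = []
        self._level = None          # level of the heading we are inside, if any
        self._parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._level = int(tag[1])
            self._parts = []

    def handle_data(self, data):
        if self._level is not None:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.headings.append((self._level, "".join(self._parts).strip()))
            self._level = None


def table_of_contents(source: str) -> list[str]:
    collector = HeadingCollector()
    collector.feed(source)
    collector.close()
    # Indent each title by two spaces per level below the top one.
    return ["  " * (level - 1) + title for level, title in collector.headings]


SAMPLE = """
<h1>Electronic texts</h1>
<h2>1. Encoding characters</h2>
<h3>A hypothetical subsection</h3>
<p><strong>Bold, but not a heading</strong></p>
<h2>3. Encoding structure</h2>
"""

contents = table_of_contents(SAMPLE)
print("\n".join(contents))
```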

Marking the section titles as section titles is not only important for the production of the table of contents, but also because if someone wanted to print this text (or any other text) on sheets of paper, it would be important to make sure that a page break does not occur right after a section title, since that looks unacceptable. The software that decides where page breaks may and may not occur will know not to put a page break after a section title only if it is aware of section titles.

Simply marking a piece of text as boldface, using <b>, is referred to as physical markup. This does not tell us what that piece of text is, only what it looks like. As opposed to this, logical (or structural) markup hints at the function of the piece of text labelled. The tags for headers introduced above, <h1>, <h2>, etc, are examples of logical markup: they do not tell us what the enclosed text looks like, but what its function is, namely the title of a section or a subsection.

Some very basic bits of logical markup are also available in WYSIWYG word processors (WYSIWYG is an acronym for “what you see is what you get”), like LibreOffice Writer, Microsoft Word, or Google Docs. The following screenshot shows some options in the first application. I advise you to always use these options to mark the relevant portions of your documents.

The following is an example of a rather elaborate chunk of logical markup. This is the beginning of the entry quiz in the 1998 edition of Országh & Magay’s English–Hungarian dictionary. (The number at the beginning of each line is not part of the code, it is there only for reference.)

    01 <szocikk>
    02   <admin>
    03     <szerk></szerk>
    04     <forr>OL</forr>
    05     <statusz st="nyers">
    06   </admin>
    07   <foalak>
    08     <cszo>quiz</cszo>
    09     <kiejt>kwɪz</kiejt>
    10   </foalak>
    11   <joszt>
    12     <nytan>
    13       <szf>n</szf>
    14     </nytan>
    15     <gralak>
    16       <anev>pl</anev>
    17       <alak>quizzes</alak>
    18     </gralak>
    19     <jvalt>
    20       <jarny>
    21         <jel>
    22           <ekv>találós játék</ekv>
    23           <ekv>rejtvény</ekv>
    24         </jel>
    25       </jarny>
    26       <jarny>
    27         <jel>
    28           <ekv><min>r</min>tréfa</ekv>
    29           <ekv>móka</ekv>
    30           <ekv>ugratás</ekv>
    31         </jel>
    32       </jarny>
    33     </jvalt>
    34     <jvalt>
    35       <jarny>
    36         <jel>
    37           <ekv><min>US</min><min>isk</min><min>biz</min>szóbeli <elh> vizsga</elh></ekv>
    38           <ekv>vizsgáztatás</ekv>
    39         </jel>
    40       </jarny>
    41 …

Practically each word in this text is marked for what it is. The tags are arbitrary strings. <cszo> in line 08, for example, encloses the head word (címszó in Hungarian), <kiejt> in line 09 encloses its pronunciation (kiejtés), and so on. You can see that punctuation marks are missing; these are inserted in a uniform manner. This source only includes unpredictable information. The printed form of this entry looks like this:

Note that some of the labels occur in the printed version differently from the source. In lines 13 and 16, for example, we find n for noun and pl for plural, but in the printed version these tags are converted to the Hungarian abbreviations, fn and tsz, respectively.
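
The conversion from such a source to a printed line can be sketched in Python with the standard `xml.etree.ElementTree` module. Several things here are assumptions made only for the illustration: the excerpt is shortened and tidied so that every tag is closed, the `LABELS` table contains just the two conversions mentioned above, and the brackets, parentheses and commas in the output are my guess, not the dictionary's actual layout.

```python
import xml.etree.ElementTree as ET

# A shortened, well-formed excerpt of the entry (hypothetical tidy-up).
ENTRY = """
<szocikk>
  <foalak><cszo>quiz</cszo><kiejt>kwɪz</kiejt></foalak>
  <joszt>
    <nytan><szf>n</szf></nytan>
    <gralak><anev>pl</anev><alak>quizzes</alak></gralak>
    <jvalt><jarny><jel>
      <ekv>találós játék</ekv><ekv>rejtvény</ekv>
    </jel></jarny></jvalt>
  </joszt>
</szocikk>
"""

# Source labels and the Hungarian abbreviations printed in their place.
LABELS = {"n": "fn", "pl": "tsz"}


def render(entry_xml: str) -> str:
    """Turn the marked-up entry into one line of text (assumed layout)."""
    entry = ET.fromstring(entry_xml)
    headword = entry.findtext("foalak/cszo")
    pronunciation = entry.findtext("foalak/kiejt")
    part_of_speech = LABELS[entry.findtext("joszt/nytan/szf")]
    form_label = LABELS[entry.findtext("joszt/gralak/anev")]
    form = entry.findtext("joszt/gralak/alak")
    equivalents = [element.text for element in entry.iter("ekv")]
    return (
        f"{headword} [{pronunciation}] {part_of_speech} "
        f"({form_label} {form}) " + ", ".join(equivalents)
    )


rendered = render(ENTRY)
print(rendered)
# quiz [kwɪz] fn (tsz quizzes) találós játék, rejtvény
```

The punctuation lives in `render`, not in the source, which is why the source can leave it out.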

Using markup languages instead of WYSIWYG word processors has numerous advantages. For one thing, this is a device-independent way of creating texts. This is important for at least two reasons. On the one hand, always bear in mind that others may not be using the same software and the same operating system as you. More importantly, there is a danger of locking your documents in formats that will one day become outdated. This is quite unlikely to happen in the foreseeable future with plain text files containing markup that does not depend on specific (often proprietary) software.

Furthermore, WYSIWYG technology necessarily merges contents with form. A WYSIWYG document will use some typeface (font) in some size with a certain textblock size, even when this is totally unnecessary. We will see why this is a problem when we discuss issues about text breaking.